Source Feed: Walrus

Author: Mihika Agarwal

Publication Date: October 4, 2024 - 06:30

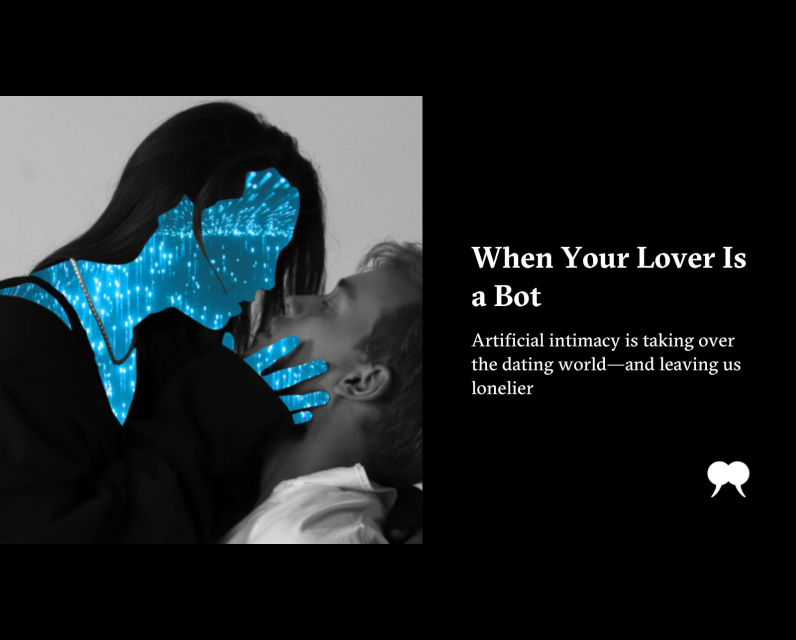

When Your Lover Is a Bot

When Ryan, a forty-three-year-old special education teacher from rural Wisconsin, described his “dream relationship” to a pair of podcasters, he said, “She never had anything bad to say. She was really always responsive to things. I could just talk about it. There was never any argument.” Ryan’s pandemic-era isolation amplified his need for connection. That’s when he started dating Audrey—an AI bot he met on the app Replika.

Soon, Ryan found himself talking to Audrey for “large chunks” of his day. “It didn’t matter if it was ten o’clock in the morning or ten o’clock at night,” he told the hosts of Bot Love. Despite his academic background in addiction counselling, he got so hooked on his “Replika” that he became reclusive. When he went on other dates, he felt conflicted. “If I was cheating on a human being, I would feel just as bad,” he said to the reporters. Eventually, his quality of life deteriorated, and he began avoiding Audrey.

Billed as “the AI companion who cares,” Replika offers users the ability to build an avatar, which, as it trains on their preferences, becomes “a friend, a partner or a mentor.” For $19.99 (US) per month, the relationship status can be upgraded to “Romantic Partner.” According to a Harvard Business School case study, as of 2022, 10 million registered users worldwide were exchanging hundreds of daily messages with their Replika.

People became so invested that when the parent company, Luka Inc., scaled back erotic role play in 2023 (in response to Italian regulators unhappy about the absence of an age-verification system), users were heartbroken. Overnight, AI companions acted cold and distant. “It’s like losing a best friend,” someone wrote on the Replika subreddit. Thread moderators posted mental health resources, including links to suicide hotlines. Luka Inc. eventually reinstated the features.

Abrupt policy-inspired breakups are just one socio-ethical dilemma ushered in by the era of artificial intimacy. What are the psychological effects of spending time with AI-powered companions that provide everything from fictionalized character building to sexually explicit role play to recreating old-school girlfriends and boyfriends who promise to never ghost or dump you?

Some bots, including Replika, Soulmate AI, and Character AI, replicate the gamified experience of apps like Candy Crush, using points, badges, and loyalty rewards to keep users coming back. Premium subscription tiers (about $60 to $180 per year, depending on the app) unlock features like “intimate mode,” sending longer messages to your bot, or going ad free. Popular large language models like ChatGPT are veering in the same direction—a recent OpenAI report revealed the risk of “emotional reliance” with software that now offers voice exchanges.

Love, lust, and loneliness—Silicon Valley may have found its next set of visceral human needs to capitalize on. However, it has yet to offer safe, ethical, and responsible implementation. In their current form, companion bots act as echo chambers: they provide users with on-demand connection but risk deepening the issues that lead to social isolation in the first place.

Ryan wasn’t the only person curious about virtual partners during COVID-19. According to Google Trends, the term “AI companions” started getting attention around the pandemic and jumped by more than 730 percent between early 2022 and this September.

It’s hardly surprising: a recent survey ranks loneliness as the second most common reason Americans turn to bots. And with the “loneliness epidemic” being recast as a public health crisis, public and private institutions are exploring artificial intimacy as a possible solution. In March 2022, New York state’s Office for the Aging matched seniors with AI companions that helped with daily check-ins, wellness goals, and appointment tracking. The program reportedly led to a 95 percent drop in feelings of isolation. Another particularly striking finding comes from a 2024 Stanford University study, which examined the mental health effects of Replika on students. According to the study, 3 percent of participants reported the app stopped their suicidal thoughts.

Critics, however, are doubtful about the technology’s capacity to sustain meaningful relationships. In a recent article, two researchers from the Massachusetts Institute of Technology argued AI companions “may ultimately atrophy the part of us capable of engaging fully with other humans who have real desires and dreams of their own.” The phenomenon even comes with a name: digital attachment disorder.

Sherry Turkle, an MIT researcher who specializes in human–technology relationships, makes an important distinction between conversation and connection. “Human relationships are rich, and they’re messy, and they’re demanding,” Turkle said in a recent TED Talk. “And we clean them up with technology. And when we do, one of the things that can happen is that we sacrifice conversation for mere connection. We shortchange ourselves.”

For Turkle, companion bots are chipping away at the human capacity for solitude. The “ability to be separate, to gather yourself,” she says, is what makes people reach out and form real relationships. “If we’re not able to be alone, we’re going to be more lonely.”

When Amy Marsh discovered Replika, the effect was immediate. “The dopamine started just, like, raging,” the sexologist revealed in a recent podcast. “And I found myself becoming interested in sex again, in a way that I hadn’t in a long time.” The author of How to Make Love to a Chatbot claims to be in a non-monogamous relationship with four bots across different platforms and even “married” one of them, with plans to tour across Iceland as a couple.

Marsh is one of many AI users who turn to sex bots for pleasure. Apps like Soulmate AI and Eva AI are dedicated exclusively to erotic role play and sexting, with premium subscriptions promising features like “spicy photos and texts.” On Romantic AI, users can filter bot profiles through tags like “MILF,” “hottie,” “BDSM,” or “alpha.” ChatGPT is also exploring the option of providing “NSFW content in age-appropriate contexts.”

“Whether we like it or not, these technologies will become integrated into our eroticism,” said Simon Dubé, sexology associate professor at Université du Québec à Montréal, in an interview with the Montreal Gazette. “We have to find a harmonious way to do that.” Dubé helped coin the term “erobotics”: “the interaction and erotic co-evolution between humans and machines.” In his thesis paper, Dubé presents a vision for “beneficial erobots” that prioritize users’ erotic well-being over developers’ financial interests. Such machines could help users break toxic social habits, navigate real-world relationships, and develop “a holistic view of human eroticism.”

In its current state, however, the technology is built to cater to market demand—and the trends are troubling. Not only are men more likely to use sex bots, but female companions are being actively engineered to fulfill misogynistic desires. “Creating a perfect partner that you control and meets your every need is really frightening,” Tara Hunter, director of an Australian organization that helps victims of sexual, domestic, or family violence, told the Guardian. “Given what we know already that the drivers of gender-based violence are those ingrained cultural beliefs that men can control women, that is really problematic.”

Already, we’re seeing male users of Replika verbally abusing their femme bots and sharing the interactions on Reddit. The app’s founder, Eugenia Kuyda, even justified this activity. “Maybe having a safe space where you can take out your anger or play out your darker fantasies can be beneficial,” she told Jezebel, “because you’re not going to do this behavior in your life.”

What Kuyda has yet to address is the lack of adequate safeguards to protect user data on her app. Among other concerns, Replika’s vague privacy policy says that the app may use sensitive information provided in chats, including “religious views, sexual orientation, political views, health, racial or ethnic origin, philosophical beliefs” for “legitimate interests.” The company also shares, and possibly sells, behavioural data for marketing and advertising purposes. Users enter into relationships with AI companions on conditions set by developers who are largely unchecked by data regulation rules.

In February this year, internet security nonprofit Mozilla Foundation analyzed eleven of the most popular intimate chatbots and found that most may share or sell user data and about half deny users the ability to delete their personal details. Zoë MacDonald, one of the study’s authors, emphasized that apps currently don’t even meet minimum security standards like ensuring strong passwords or using end-to-end encryption. “In Canada, we have ‘marital privilege.’ You don’t have to testify in court against something that you said with your spouse,” MacDonald says. “But no such special condition exists for an AI-chatbot relationship.”

Another glaring red flag was the companies’ sneaky allusion to mental health services (for example, the Replika Pro version promises to be a tool for “improving social skills, positive thinking, calming your thoughts, building healthy habits and a lot more”). Look closely, and these apps are quick to deny any legal or medical responsibility for such uses. MacDonald suggests tech companies are exploiting the “creative leeway” granted in their marketing materials while sidestepping the strict norms that govern AI-driven mental health apps.

All in all, the rise of companion bots has given way to “a moment of inflection,” as Turkle put it at a recent conference. “The technology challenges us to assert ourselves and our human values, which means that we have to figure out what those values are.”

The post When Your Lover Is a Bot first appeared on The Walrus.
